Artificial intelligence is evolving rapidly, and Alibaba’s Qwen-2.5 is among the most capable open-weight model families available. With strong natural language processing (NLP), companion models for vision and audio, and specialized variants for coding and mathematics, Qwen-2.5 stands out among open-source AI models. Below, we explore its features, benchmark results, applications, and how to use it effectively both online and locally.

Key Features and Capabilities of Qwen-2.5

Natural Language Processing (NLP) Excellence

Qwen-2.5 performs well across tasks such as content summarization, sentiment analysis, and structured data processing (for example, understanding tables and producing JSON output). The larger variants (7B and up) accept contexts of up to 128K (131,072) tokens and can generate up to 8K tokens in a single response. It supports more than 29 languages, including English, Chinese, and Arabic, which makes it usable across regions and industries. The models were pre-trained on up to 18 trillion tokens, and the instruction-tuned versions were further refined with supervised fine-tuning and reinforcement learning. For more technical details, explore the Qwen-2.5 official documentation.
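
Because the context window is shared between the prompt and the generated answer, long inputs must leave room for the output. The sketch below assumes a 131,072-token window and an 8,192-token output budget (values to confirm on the model card for the size you deploy) and takes a pluggable token counter; in practice `count_tokens` would wrap the model’s own tokenizer, e.g. `len(tokenizer(text)["input_ids"])`. The greedy chunking strategy is an illustrative choice, not something prescribed by Qwen.

```python
from typing import Callable, List

# Assumed limits for the larger Qwen-2.5 models: a 131,072-token ("128K")
# window and an 8,192-token generation cap. Confirm on the model card.
CONTEXT_LIMIT = 131_072
MAX_NEW_TOKENS = 8_192


def fits_in_context(prompt_tokens: int,
                    max_new_tokens: int = MAX_NEW_TOKENS,
                    context_limit: int = CONTEXT_LIMIT) -> bool:
    """True if the prompt plus the reserved output budget fits the window."""
    return prompt_tokens + max_new_tokens <= context_limit


def chunk_by_tokens(paragraphs: List[str],
                    count_tokens: Callable[[str], int],
                    budget: int) -> List[str]:
    """Greedily pack paragraphs into chunks of roughly `budget` tokens.

    Separator tokens are not counted, so leave some slack in `budget`.
    A paragraph longer than the budget becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    used = 0
    for para in paragraphs:
        n = count_tokens(para)
        # Close the current chunk if this paragraph would overflow it.
        if current and used + n > budget:
            chunks.append("\n\n".join(current))
            current, used = [], 0
        current.append(para)
        used += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

For example, with a crude whitespace counter (`lambda s: len(s.split())`) standing in for the real tokenizer, packing `["a b c", "d e", "f g h i"]` with a budget of 5 yields two chunks, the first holding the first two paragraphs.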

Multimodal Integration

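The core Qwen-2.5 language models are text-only; image, video, and audio processing come from companion models in the Qwen family, such as Qwen-2.5-VL for vision-language tasks and dedicated audio models for speech and sound. Together they enable applications like image captioning, document parsing, and video-based question answering, with potential uses in AR/VR, education, and accessibility technologies. Alibaba reports that its largest vision-language model is competitive with proprietary systems such as GPT-4o on many vision benchmarks. These models interpret images rather than generate them, so image generators like OpenAI’s DALL-E are not a direct comparison.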
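
Vision-language models in the Qwen family take chat messages whose content is a list of typed parts rather than a plain string. The sketch below builds that structure, assuming the `{"type": "image", "image": ...}` / `{"type": "video", "video": ...}` layout shown on the Qwen2-VL and Qwen2.5-VL model cards; the file paths are placeholders, and the message would still have to pass through the model’s processor (the cards use the `qwen_vl_utils` helper `process_vision_info` for this) before inference.

```python
from typing import Dict, List, Optional


def build_vl_message(question: str,
                     image: Optional[str] = None,
                     video: Optional[str] = None) -> List[Dict]:
    """Build a single-turn user message mixing media and text.

    Media parts come first and the text prompt last, mirroring the
    examples on the Qwen vision-language model cards.
    """
    content: List[Dict] = []
    if image is not None:
        content.append({"type": "image", "image": image})
    if video is not None:
        content.append({"type": "video", "video": video})
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


# Placeholder path; local files are referenced with a file:// URI on the model cards.
messages = build_vl_message("What does this chart show?", image="file:///path/to/chart.png")
```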

Specialized Domain Models

Qwen-2.5 offers task-specific versions like:

- Qwen-2.5-Coder: Built for code generation, completion, and debugging; Alibaba reports that its largest instruction-tuned model matches GPT-4o on several coding benchmarks.

- Qwen-2.5-Math: Tailored for mathematical reasoning in English and Chinese, with Alibaba reporting that it outperforms larger general-purpose models, including some of Meta’s Llama models, on math benchmarks.
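
In practice, choosing a variant mostly means choosing a Hugging Face repository ID. The small lookup helper below assumes the naming pattern `Qwen/Qwen2.5[-Coder|-Math]-<size>[-Instruct]` and the size lists in the dictionary; verify both against the Qwen organization page before relying on them. The math system prompt is the chain-of-thought instruction suggested on the Qwen2.5-Math model card.

```python
# Sizes assumed to be published for each Qwen-2.5 line on Hugging Face;
# check the Qwen organization page for the current listings.
VARIANT_SIZES = {
    "general": ["0.5B", "1.5B", "3B", "7B", "14B", "32B", "72B"],
    "coder": ["0.5B", "1.5B", "3B", "7B", "14B", "32B"],
    "math": ["1.5B", "7B", "72B"],
}

PREFIX = {"general": "Qwen2.5", "coder": "Qwen2.5-Coder", "math": "Qwen2.5-Math"}

# Chain-of-thought system prompt suggested on the Qwen2.5-Math model card.
MATH_COT_SYSTEM = "Please reason step by step, and put your final answer within \\boxed{}."


def repo_id(task: str, size: str, instruct: bool = True) -> str:
    """Map a task ("general", "coder", "math") and size to a repository ID."""
    if task not in VARIANT_SIZES:
        raise ValueError(f"unknown task {task!r}")
    if size not in VARIANT_SIZES[task]:
        raise ValueError(f"{task} models come in {VARIANT_SIZES[task]}, not {size}")
    suffix = "-Instruct" if instruct else ""
    return f"Qwen/{PREFIX[task]}-{size}{suffix}"
```

Raising an error for unsupported combinations (there is no 3B math model, for instance) surfaces a typo before a long download starts.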

Scalability and Efficiency

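Qwen-2.5 comes in seven sizes (0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B parameters), covering both lightweight local deployments and resource-intensive enterprise applications. Its decoder-only Transformer architecture uses grouped-query attention (GQA), which shrinks the memory needed to cache keys and values during inference, so longer contexts and larger batches fit on the same hardware.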
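
To see why grouped-query attention matters, compare the key-value cache with and without head sharing. The arithmetic below is a sketch that assumes the Qwen2.5-7B shape values listed in the comment (as published in its `config.json`; re-check before relying on them) and 16-bit cache entries.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Key-value cache size for one sequence.

    The leading factor 2 covers keys and values; bytes_per_value=2
    assumes fp16/bf16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed Qwen2.5-7B shape: 28 layers, 28 query heads, 4 key-value heads,
# head_dim = hidden_size 3584 / 28 heads = 128.
LAYERS, Q_HEADS, KV_HEADS, HEAD_DIM = 28, 28, 4, 128
SEQ = 32_768

gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ)
# Hypothetical baseline: every query head keeps its own keys and values.
mha = kv_cache_bytes(LAYERS, Q_HEADS, HEAD_DIM, SEQ)
print(f"GQA: {gqa / 2**30:.2f} GiB, full multi-head: {mha / 2**30:.2f} GiB")
```

This prints `GQA: 1.75 GiB, full multi-head: 12.25 GiB`: with 7 query heads sharing each key-value head, a 32K-token sequence needs one seventh of the cache.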

Historical Development of Qwen Models

Alibaba’s Qwen models have evolved steadily, and Qwen-2.5 is a significant step up from its predecessors. Compared with Qwen2, it expands the pre-training data from 7 trillion to 18 trillion tokens, improves coding, mathematics, instruction following, long-text generation, and structured output such as JSON, and ships with dedicated Coder and Math variants. These updates position it as a competitor to GPT-4-class proprietary models.

Benchmark Performance

Competitive Results

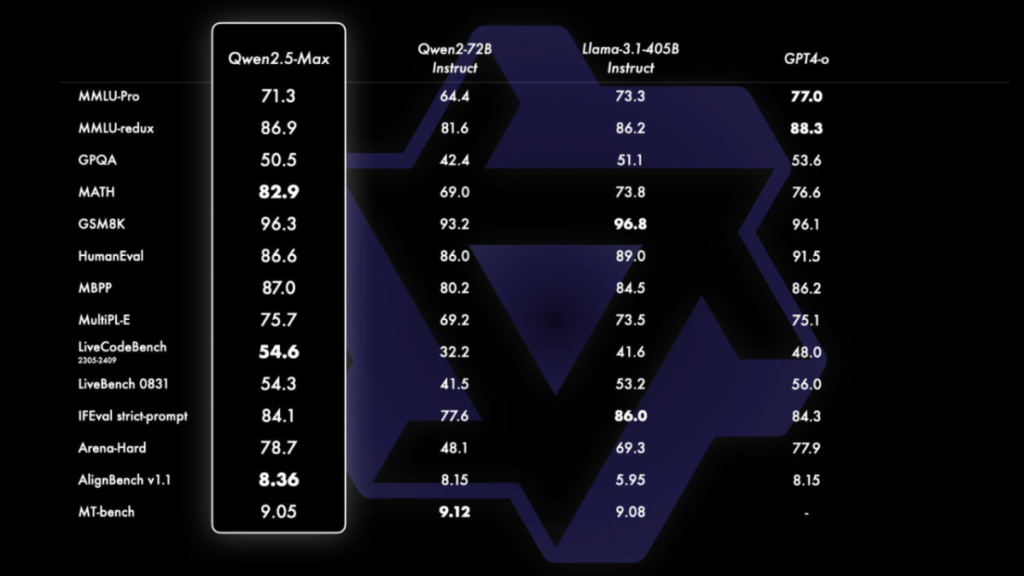

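Alibaba’s published results show Qwen-2.5 performing strongly on benchmarks such as:

- MATH: The flagship 72B instruction-tuned model scores 83.1, leading its open-source peers.

- LiveCodeBench: Ahead of GPT-4-class models on many code-generation tasks.

- MMLU: Strong results on general knowledge and reasoning, with solid multilingual comprehension (GitHubDailyAI).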

Open-Source Leadership

Compared with other open-weight models such as Meta’s Llama, and with proprietary chatbots such as Google’s Bard (now Gemini), Qwen-2.5 offers competitive accuracy while keeping its weights openly available, most sizes under the Apache 2.0 license. Its strong coding and math results make it particularly appealing for developers. Explore its benchmark comparisons in this detailed report.

How to Use Qwen-2.5 Online

Cloud Access

Qwen-2.5 is hosted on platforms like ModelScope, which provide API access so developers can embed its capabilities into their applications with minimal setup. Businesses can also use the ready-made instruction-tuned versions for rapid deployment.
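
Many hosted Qwen endpoints, as well as self-hosted servers such as vLLM, expose an OpenAI-compatible chat-completions API. The standard-library sketch below assumes such an endpoint; the base URL, API key, and model name are placeholders to replace with the values from your provider’s documentation, since they differ between hosts.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(base_url: str, api_key: str, model: str,
                       messages: List[Dict[str, str]],
                       max_tokens: int = 512,
                       temperature: float = 0.7) -> urllib.request.Request:
    """Prepare (but do not send) an OpenAI-style /chat/completions POST."""
    payload = {
        "model": model,
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

The same request works against a local server started with `vllm serve Qwen/Qwen2.5-7B-Instruct`, which listens at `http://localhost:8000/v1` by default, so application code can switch between cloud and local deployment by changing only the base URL and model name.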

Cost-Effective Solutions

As an open-weight model, Qwen-2.5 democratizes access to cutting-edge AI. Hosted cloud services typically offer pay-as-you-go pricing, keeping costs manageable for startups and researchers. Details on these pricing structures are available in the ModelScope guide.
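
Pay-as-you-go bills are usually metered per token, often with separate rates for input and output. A back-of-the-envelope estimator, assuming per-million-token pricing; the prices below are made-up placeholders, not actual ModelScope or Alibaba Cloud rates.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  price_in_per_million: float,
                  price_out_per_million: float) -> float:
    """Pay-as-you-go cost, with prices quoted per million tokens."""
    return (input_tokens * price_in_per_million
            + output_tokens * price_out_per_million) / 1_000_000


# Hypothetical prices for illustration only; substitute your provider's rates.
PRICE_IN, PRICE_OUT = 0.50, 1.50
monthly = estimate_cost(input_tokens=20_000_000, output_tokens=5_000_000,
                        price_in_per_million=PRICE_IN,
                        price_out_per_million=PRICE_OUT)
print(f"Estimated monthly cost: {monthly:.2f}")
```

With these placeholder rates, 20 million input and 5 million output tokens a month come to 17.50 in the provider’s currency.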

Deploying Qwen-2.5 Locally

System Requirements

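Hardware needs for local deployment scale with model size and numeric precision. The larger configurations call for data-center GPUs such as the NVIDIA A100 (several of them for the 72B model at 16-bit precision) and at least 64 GB of system RAM, while a 24 GB consumer card such as the RTX 3090 can run the 7B model at 16-bit precision or larger models once quantized. Smaller models like the 3B variant are suitable for much less demanding setups.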
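
A quick way to size hardware is to multiply the parameter count by the bytes stored per parameter. The sketch below uses nominal parameter counts (the real ones differ slightly; the "7B" model has roughly 7.6B parameters) and counts weights only, so it gives a lower bound.

```python
# Nominal parameter counts in billions; actual counts differ slightly.
SIZES_B = {"0.5B": 0.5, "1.5B": 1.5, "3B": 3, "7B": 7,
           "14B": 14, "32B": 32, "72B": 72}
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(size: str, precision: str) -> float:
    """Memory for the weights alone, in GB (10**9 bytes).

    Activations, the key-value cache, and framework overhead come on
    top, so real usage is noticeably higher.
    """
    return SIZES_B[size] * BYTES_PER_PARAM[precision]


for size in ("3B", "7B", "72B"):
    row = ", ".join(f"{p}: {weight_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{size:>4} -> {row}")
```

By this estimate the 7B model needs about 14 GB for bf16 weights, which fits a 24 GB RTX 3090, whereas the 72B model needs roughly 144 GB in bf16 (multiple 80 GB A100s) or about 36 GB at 4-bit.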

Running the Model

With frameworks like Hugging Face Transformers, users can run Qwen-2.5 locally and fine-tune it for domain-specific tasks. Official quantized variants (GPTQ Int8 and Int4, AWQ, and GGUF) reduce memory use so that larger models fit on resource-limited hardware, usually at a small cost in accuracy. Comprehensive setup guides are provided in the official GitHub repository.
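
A minimal sketch of local inference with Hugging Face Transformers, following the pattern on the Qwen2.5 model cards. `to_chatml` is an illustrative, simplified rendering of the ChatML prompt layout that Qwen-2.5 chat models expect (it ignores tool calls); real code should rely on `tokenizer.apply_chat_template`. `generate` assumes `transformers`, `torch`, and `accelerate` (needed for `device_map="auto"`) are installed, and downloads the small 0.5B instruct model on first use.

```python
from typing import Dict, List

# Default system prompt that Qwen-2.5's chat template inserts when none is given.
DEFAULT_SYSTEM = "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."


def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render plain-text chat turns in the ChatML layout (simplified: no tool calls)."""
    if not messages or messages[0]["role"] != "system":
        messages = [{"role": "system", "content": DEFAULT_SYSTEM}] + messages
    text = "".join(
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    )
    if add_generation_prompt:
        # Open an assistant turn for the model to complete.
        text += "<|im_start|>assistant\n"
    return text


def generate(prompt: str, model_id: str = "Qwen/Qwen2.5-0.5B-Instruct",
             max_new_tokens: int = 256) -> str:
    """Run one chat turn locally with Hugging Face Transformers."""
    # Imported lazily so to_chatml works without these packages installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype="auto", device_map="auto"
    )
    messages = [{"role": "user", "content": prompt}]
    # The tokenizer's bundled template is authoritative; to_chatml only mirrors it.
    text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Swapping `model_id` for a quantized repository is typically the only change needed to try a lower-memory variant, provided the matching quantization backend is installed.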

Future Directions

Looking ahead, Alibaba plans to enhance Qwen-2.5 with:

- Broader language support.

- Improved efficiency for edge deployments.

- Enhanced multimodal capabilities for audio and video applications.

These updates aim to strengthen Qwen-2.5’s position as a leading open-source AI model family and broaden its versatility for users worldwide.

FAQ

What is Qwen-2.5 and how does it differ from earlier models?

Qwen-2.5 is the latest iteration of Alibaba’s Qwen large language model series, offering improved NLP, companion multimodal models, and task-specific variants like Qwen-2.5-Coder and Qwen-2.5-Math. Compared to earlier versions, it was trained on far more data (up to 18 trillion tokens), supports a longer context window (up to 128K tokens in the larger models), and is more efficient and accurate.

Is Qwen-2.5 free to use?

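Yes. Qwen-2.5’s weights are openly released (most sizes under Apache 2.0, with the 3B and 72B models under Alibaba’s own licenses), so you can download them and run them on your own hardware at no cost. However, cloud services offering Qwen-2.5 (e.g., via ModelScope) may involve subscription or usage-based fees.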

What hardware is needed to run Qwen-2.5 locally?

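Requirements depend on model size and precision. The largest models need data-center GPUs such as the NVIDIA A100 (several for the 72B model at 16-bit precision) and at least 64 GB of system RAM, while a 24 GB card such as the RTX 3090 can run the 7B model or quantized larger ones. Smaller versions, such as the 3B variant, run on far more modest setups.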

How does Qwen-2.5 compare to other models like GPT-4 or Llama?

Alibaba reports comparable or superior performance to GPT-4-class and Llama models in specific areas such as coding (LiveCodeBench) and mathematics (MATH benchmark). GPT-4 remains a leader among proprietary models, while Qwen-2.5 stands out for its open weights and competitive efficiency.

Can I fine-tune Qwen-2.5 for custom tasks?

Yes, Qwen-2.5 can be fine-tuned using frameworks like Hugging Face Transformers. This flexibility allows users to tailor the model to specific domains, such as healthcare or finance.
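
Most fine-tuning workflows start by converting domain examples into chat-formatted records. The sketch below writes JSON Lines with one `messages` list per example, a conversational layout that several fine-tuning tools accept (TRL’s `SFTTrainer`, for instance, supports datasets in this shape); the exact schema your tool expects is an assumption to verify in its documentation.

```python
import json
from typing import Iterable, List, Tuple


def to_chat_jsonl(pairs: Iterable[Tuple[str, str]], system: str) -> str:
    """Convert (question, answer) pairs into one JSON object per line.

    Each record holds a "messages" list in the system/user/assistant
    format that chat templates such as Qwen-2.5's consume.
    """
    lines: List[str] = []
    for question, answer in pairs:
        record = {"messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]}
        # ensure_ascii=False keeps non-English text (e.g. Chinese) readable.
        lines.append(json.dumps(record, ensure_ascii=False))
    return ("\n".join(lines) + "\n") if lines else ""
```

The resulting string can be written to a `.jsonl` file and loaded as a training dataset; keeping the system prompt identical to the one used at inference time helps the tuned model behave consistently.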

Where can I access Qwen-2.5 for experimentation?

Model weights are available on Hugging Face and ModelScope for local deployment, and the official GitHub repository provides code and documentation. For cloud-based usage, platforms like ModelScope provide hosted versions with API access.